Yesterday (a Sunday), I coaxed some of my friends into running the first trial for my master's thesis with me.

It was a warm, sunny fall day, and I forced all of them to sit in the basement with me and play around with robots and machine learning. I'd like to thank them all again for doing this with me; I'll be forever grateful.

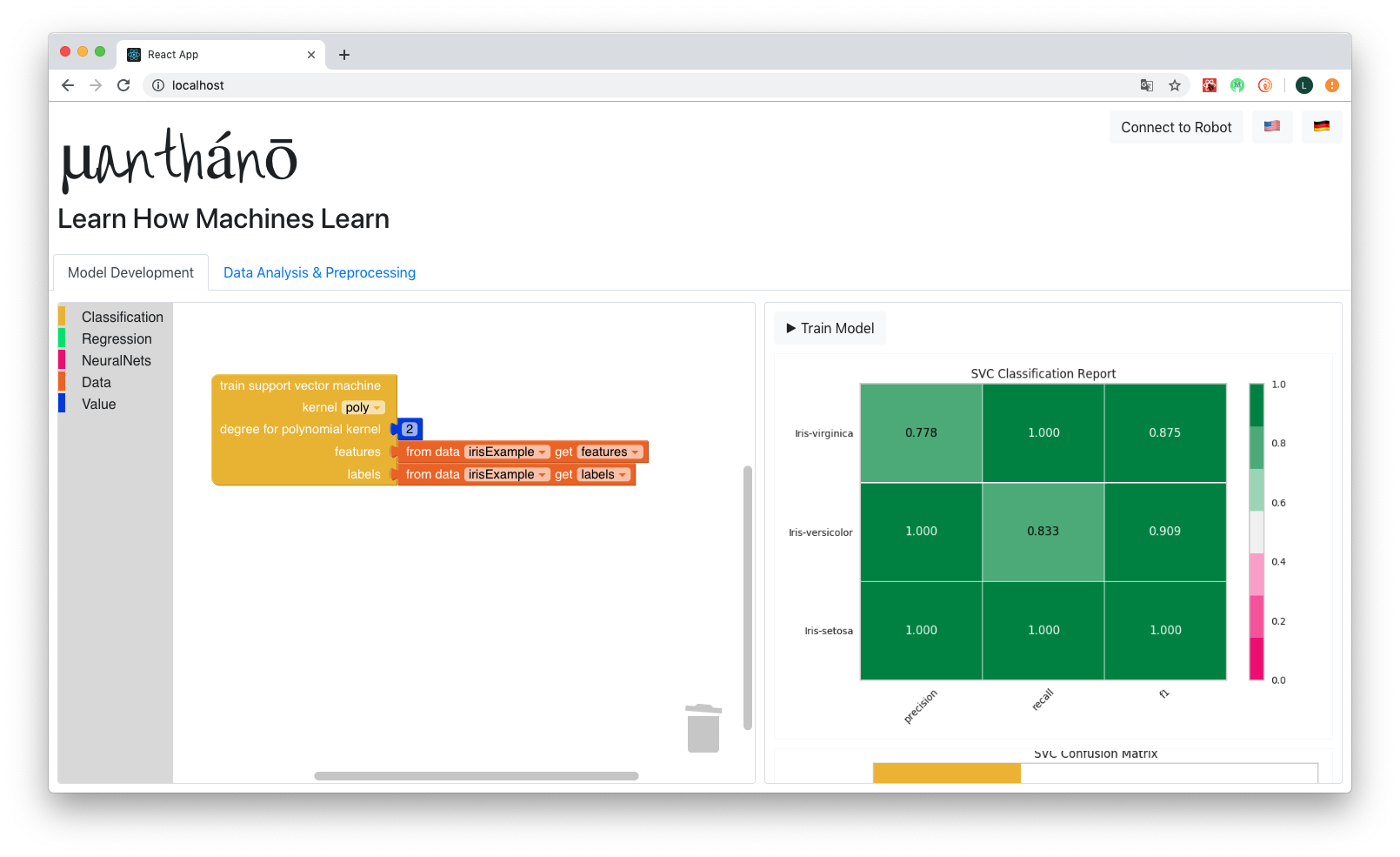

We started the day with some Mate, coffee, a 1.5-hour introduction to machine learning, and some remarks on the practical part that followed. The practical part consisted of the digit classification task I've talked about in a previous blog post. This time, however, all participants were able to work with µanthánō, the web application I've been working on for the past three months.

Working in pairs, the participants had to train machine learning models so that their robot would correctly classify all digits from 1 to 9. Before the workshop, I assembled the robots, set up the SD cards with the programs needed to drive over the digits, and collected training and test data that the participants could use and extend. This preparation made it possible for 50% of the participants to have no computer science background whatsoever, which was necessary to evaluate the main objective of my thesis: to create an environment in which anyone can gain a deeper understanding of machine learning.

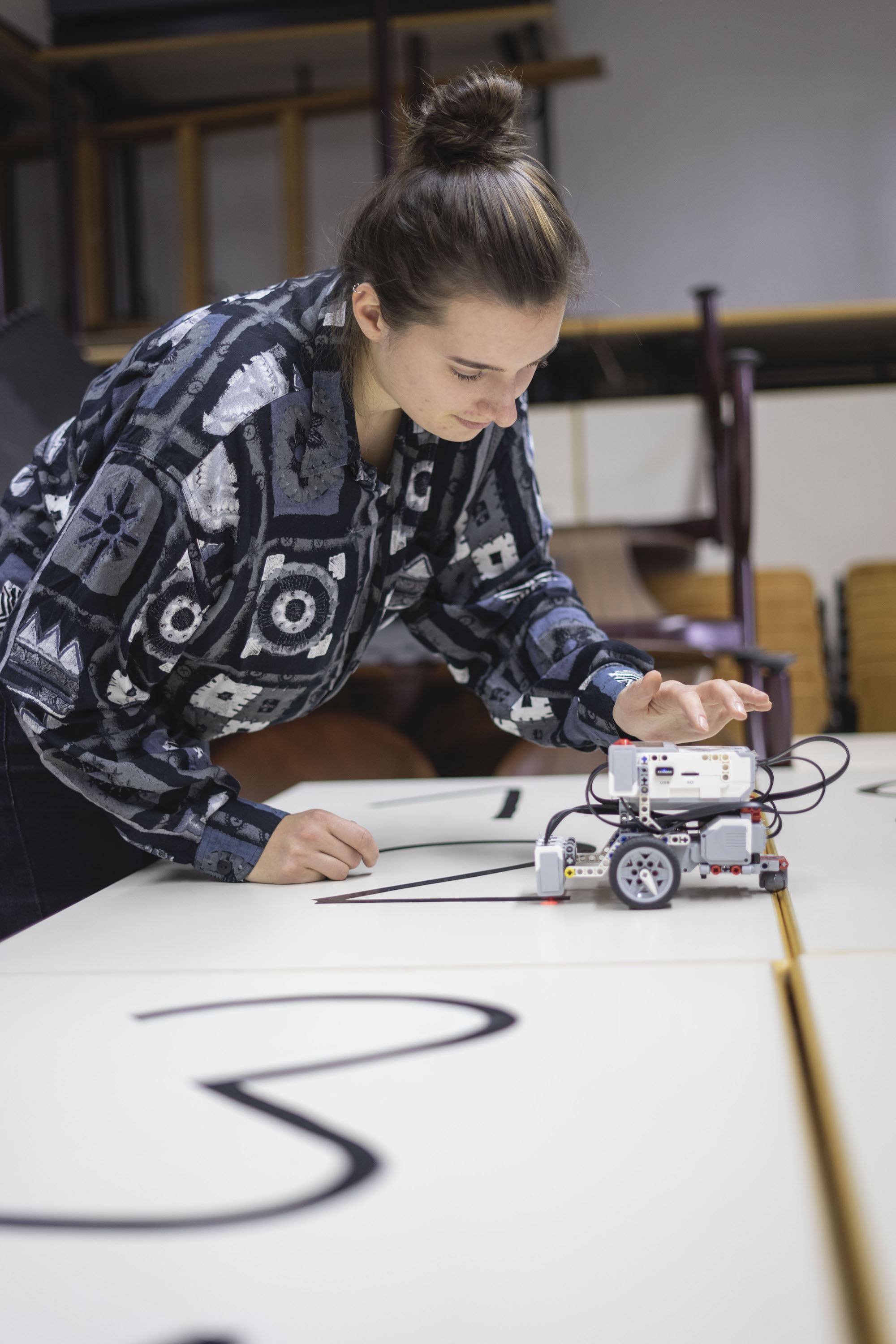

Each team approached the task differently. Two teams started by collecting more training data, one team thoroughly studied the PDF (in German) containing more information on all the algorithms before testing them, and the rest started testing the different algorithms right away. All teams had about two hours to complete the task before we came together to discuss how the different models had performed and how their performance could be improved.

Antonia collecting more data

Marlene and Franz discussing their results

Most teams identified Support Vector Machines and Decision Trees as the best-performing algorithms for the task, which was the expected result. They were also able to correctly identify the disadvantages of algorithms such as K-Nearest Neighbours and the Multilayer Perceptron. Furthermore, they realized that the amount of data was a problem for almost all algorithms, which is entirely my fault, since I didn't collect enough data the week before the workshop.

Then I asked the participants how they felt about their learning success and what they considered good or bad about the workshop. They rated their learning success on a scale from 1 to 5, where 1 means great learning success, 2 good, 3 slight, 4 none, and 5 negative learning success (more confused than before). Three of the five participants with a computer science (CS) background rated their learning success a 4, which is not surprising considering that most of them had prior experience with machine learning. Of the other two, one rated their learning success a 3 and one rated his a 2. Of the five participants without any prior knowledge of CS or machine learning, two rated their learning success a 2.5 and the rest a 3.

As for criticism, I have condensed all of it below and only mention a participant's background occasionally.

The one thing almost all participants criticized was the structure of the workshop: they found the introduction too long and too heavy. This was a huge didactic failure on my part. It should have been clear to me that it is never a good idea to flood people with information on machine learning for 1.5 hours. Some participants also considered parts of the information unnecessary for the task at hand, which I will have to revisit for the next workshop. Related to this was the criticism that the learning objective was not clearly defined in the introduction. Three of the ten participants liked the introduction and found it adequate; two of them had a CS background and one did not.

As for the practical part, five participants emphasized how much they liked it. Three participants evaluated it negatively, for different reasons. One non-CS participant found the task frustrating, since none of the models produced good results. Another non-CS participant was a little confused by the results and found testing the different models somewhat random. A CS participant questioned the features preselected for the task and found this default restrictive.

The centerpiece of my master's thesis, the web application, was well received. All participants found the interface intuitive, and there were hardly any questions about how to use it. Only minor issues came up, mostly of a technical nature, and they were identified only by CS participants.

Lastly, working with robots in the practical part was perceived as highly motivating, and the consensus was that the workshop was a lot of fun.

Bobbie having fun

In November, I am going to give two more 1.5-hour workshops for high school students and a hands-on workshop at a conference. I will definitely use the feedback I collected to improve the experience at these upcoming events. We'll see whether I actually keep my promise to myself this time and write about them here. Until then, toodles!